ELE522: Large-Scale Optimization for Data Science

Course Description

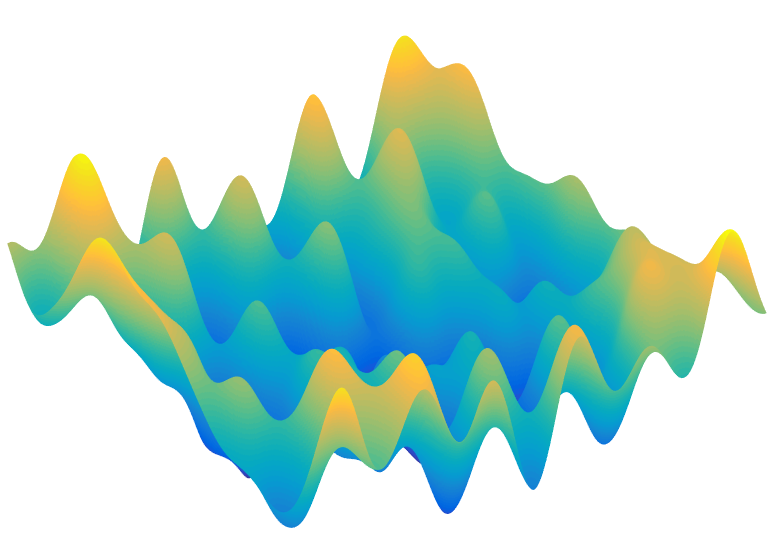

This graduate-level course introduces optimization methods that are suitable for large-scale problems arising in data science and machine learning applications. We will first explore several algorithms that are efficient for both smooth and nonsmooth problems, including gradient methods, proximal methods, mirror descent, Nesterov's accelerated methods, ADMM, quasi-Newton methods, stochastic optimization, variance reduction, as well as distributed optimization. We will then discuss the efficacy of these methods in concrete data science problems, under appropriate statistical models. Finally, we will introduce a global geometric analysis to characterize the nonconvex landscape of the empirical risks in several high-dimensional estimation and learning problems.

Lectures

Mon, Wed 3:00 PM - 4:20 PM.

Room: Sherrerd 101.

Teaching Staff

Instructor: Yuxin Chen, C330 Equad, yuxin dot chen at princeton dot edu

Teaching assistant: Yuling Yan, yulingy at princeton dot edu

Announcements

9/27: Homework 1 is out (see Blackboard). It is due on Wednesday, Oct. 9.